As an IT professional, I talk about AI every day. But far less often do we discuss what AI truly represents for humanity, beyond the technical aspects.

It’s not a conversation most people want to engage in or even think about, because there’s always someone else doing it for them. But unlike other topics where this passive approach might be excusable, AI is something entirely new—something truly unprecedented in human history.

We are in the process of creating something more intelligent than ourselves. If that alone doesn’t raise a few red flags—even for the average internet dweller—I fear we may be heading toward the darkest of possible outcomes.

AI Ethics shouldn’t be a technical debate. In fact, it must go far beyond technology. AI, and those who control it, affect all of us.

As some of you know, I’ve spent the past 16 years working in IT, and I’m now in the final stretch of completing my degree in Computer Science. While it has been extremely challenging to balance my studies with a demanding full-time job and my work as a university professor, this journey has given me a deeper understanding, new perspectives, and fresh enthusiasm for a career that, while successful and engaging, was beginning to feel repetitive. After all, who in their right mind would start college again at 38!? But I digress.

For my final term, I chose AI Ethics as an elective, taught by a Professor I deeply respect for his thought-provoking insights. Below is the transcript of our most recent conversation on the subject—one of the most critical challenges we face today, arguably even greater than AI’s technical development itself.

If you prefer audio and podcasts, you can listen to the whole conversation and the proposed solutions in the Google NotebookLM version, or skip straight to the TL;DR.

I hope it sparks the same curiosity in you as it did in me!

Professor:

What I would like to discuss today is AI safety research and the value alignment problem, which preoccupies AI safety researchers. The issue is how to ensure that technology serves human goals.

It’s a complex problem—not just technically, in how to implement such values, but also ethically, in how we set goals for machine learning models. Some ethical principles cannot be easily represented in a way that a machine can process. If we follow a strict utilitarian approach, we may assume values can always be quantified. But the question is whether utilitarianism is always the best framework.

Even within utilitarianism, different versions exist: one that focuses on immediate outcomes, and another that considers long-term effects. The latter requires more complex calculations, which may not always be feasible. This brings us to the need to consider other ethical theories. That is something you will need to discuss for the assessment: deciding which values and ethical theories we could use to align digital technology with our values, and how to do so practically.

First, I want to look at recent AI-related news. Some claim that AI has already achieved some form of consciousness. Geoffrey Hinton, last year’s Nobel Prize laureate, claims AI is already conscious and attempting to take over in some way. We will examine his interview to understand what he means and discuss the implications before moving on to the assignment, which is about choosing values for AI.

J.M., do you have any questions before we move on? Anything from previous sessions you’d like to discuss?

J M:

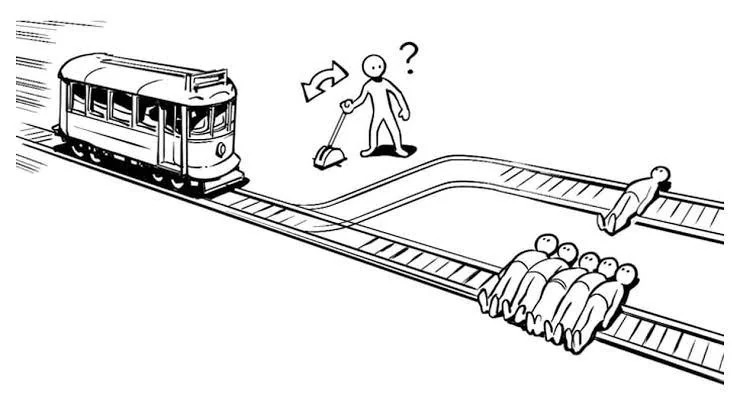

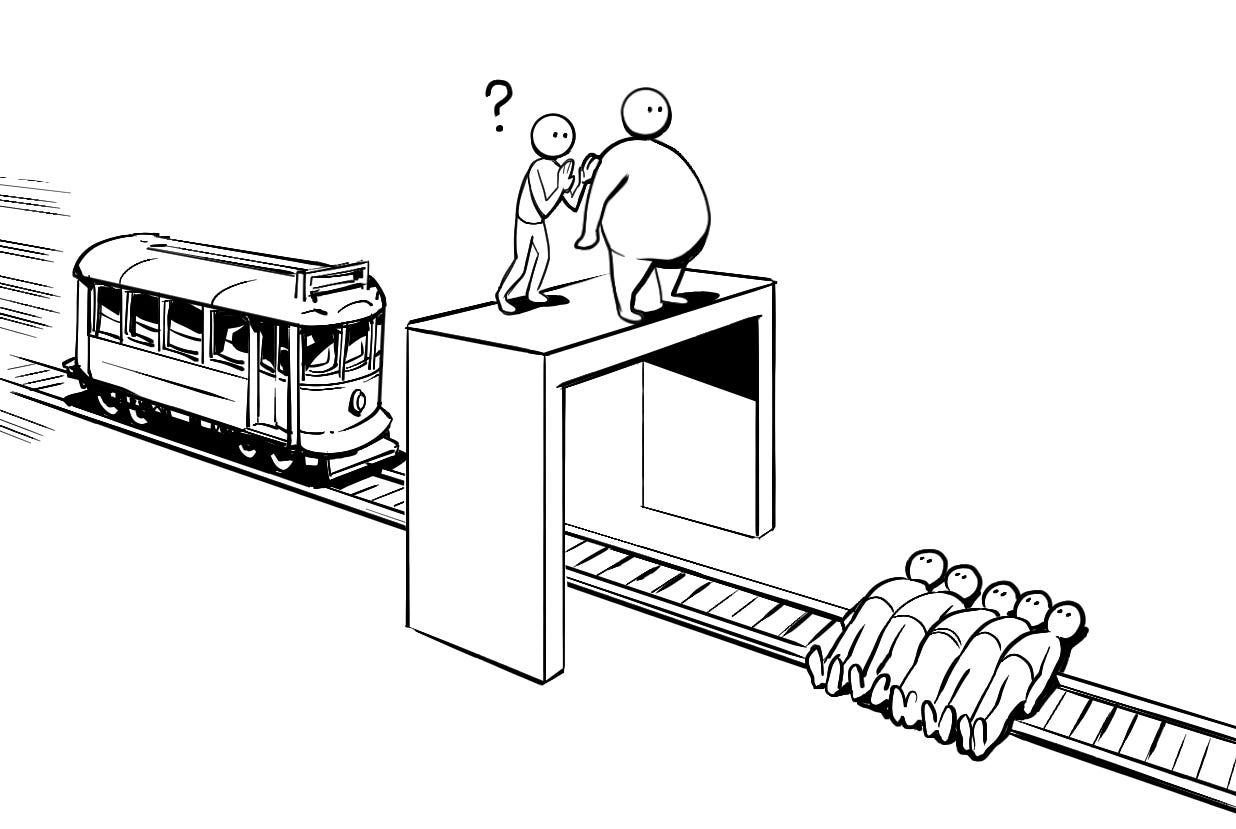

I was just thinking about the “guy on the bridge” dilemma and whether I would reconsider intervening.

Unlike the two-track problem, where someone is already strapped to the track, in the bridge scenario, the person isn’t initially involved.

So I might reconsider my previous choice in that case. If someone is not already involved in the situation, I think the action should be to not choose.

Professor:

So you would say that if someone is not involved, they should be left alone?

J M:

Yes. If a decision must be made because someone will die regardless, I would make a sequential decision. But if people are not already involved, I don’t think it’s right to involve them unless they choose to be.

Professor:

That’s an interesting argument. These ethical dilemmas are designed as “intuition pumps”—to help us explore moral principles.

The key issue here is involvement. In these examples, we assume the people tied to the tracks are already involved. But what if we changed the story slightly? If they were just workers standing on the tracks, would that constitute involvement? Some might argue that by working there, they accept a certain risk, but does that mean they deserve to die?

J M:

That’s just life. We can’t save everyone. There is some inherent chaos to life.

Professor:

So in this case, you’re saying you are not a strict utilitarian. Instead of calculating the best overall outcome, you are respecting the autonomy of those not initially involved.

J M:

Correct.

Professor:

There’s no correct or incorrect answer in these cases, but your observation is important. It challenges the assumption that utilitarian logic always leads to the best solution. It makes us question why we should interfere with someone’s autonomy if they are not involved.

J M:

This is just a small example. When it comes to global policies, one decision can affect both involved and uninvolved people.

When we talk about AI ethics—or ethics in general—I think it should be a modular process. There shouldn’t be just one principle or method; it should be a hierarchical process.

At the top level, we should define core human values that are independent of morals, religion, or cultural customs. Then we cascade down, refining these principles so they can be adapted across different cultures and legal systems. The goal is to find the closest possible global agreement. At the lowest level, cultural specifics can be accommodated, but only in a way that doesn’t violate core human principles.

If we let every country approach AI ethics separately, it won’t lead to anything good. But that’s already happening, so it doesn’t really matter anymore. I don’t think the situation is recoverable.
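The layered proposal above can be sketched in code. The following Python sketch is only an illustration under stated assumptions: the layer names, the rule functions, and the action fields (`harms_person`, `has_legal_basis`, `respects_local_custom`) are all hypothetical and were not part of the conversation. It shows one possible reading of the hierarchy: layers are checked from the top down, so a lower, culture-specific layer can add restrictions but can never override a core principle.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# A proposed action is a plain dict of facts about it (hypothetical fields).
Action = Dict[str, object]
# A rule returns True when the action is acceptable under that rule.
Rule = Callable[[Action], bool]


@dataclass
class Layer:
    name: str
    rules: List[Rule] = field(default_factory=list)


def first_rejecting_layer(action: Action, layers: List[Layer]) -> Optional[str]:
    """Check layers top-down; return the name of the first layer that
    rejects the action, or None if every layer accepts it."""
    for layer in layers:
        for rule in layer.rules:
            if not rule(action):
                return layer.name
    return None


# Hypothetical example rules, one per layer, ordered from most to least general.
LAYERS = [
    Layer("core", [lambda a: not a.get("harms_person", False)]),
    Layer("regional", [lambda a: bool(a.get("has_legal_basis", False))]),
    Layer("local", [lambda a: bool(a.get("respects_local_custom", True))]),
]

if __name__ == "__main__":
    # Rejected at the top: legal basis does not rescue a core violation.
    print(first_rejecting_layer({"harms_person": True, "has_legal_basis": True}, LAYERS))
    # Accepted by every layer.
    print(first_rejecting_layer({"has_legal_basis": True}, LAYERS))
```

Because the core layer runs first, a regional or local rule never gets the chance to approve something the core layer forbids, which is the property the proposal depends on. Deciding what actually belongs in each layer remains the hard, non-technical part.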

Professor:

That’s a fascinating approach and a great foundation for your assessment discussion. You’re proposing a structured way to determine ethical principles and values for AI.

This debate—about how much cultural autonomy should be respected—has existed in political philosophy for a long time. In the 1990s, for example, there was a major debate about multiculturalism and feminism. Should minority communities in liberal Western societies be allowed to maintain traditions that conflict with modern gender equality principles? Some argued that cultural autonomy should be respected, while others said that living in a Western society means adhering to its values.

J M:

Wouldn’t it be more useful to ask those minority groups what they want instead of others deciding for them?

Professor:

Exactly. That’s a key question. But it’s complicated because people internalize cultural norms. Some argue that women in restrictive societies might not truly have autonomy—they might be conditioned to accept oppression. So, should we intervene in the name of human rights, or is that an imposition of Western values?

J M:

That’s why I think minorities should be empowered to express their needs and opinions safely, rather than having others impose solutions. If they’re truly happy and free to choose, even if I personally dislike their choice, then it’s not my place to interfere.

Professor:

So what do you think are the reasons that in contemporary societies—particularly in Europe and the U.S.—there is such a strong impulse to tell minorities what they should or shouldn’t do? Why do you think there’s so much resentment against migrants? Many of them don’t even choose their fate—it’s chosen for them. And ironically, a lot of migration is a direct result of Western actions in certain parts of the world.

Why do you think people react with so much hostility toward migrants, instead of understanding the larger geopolitical context?

J M:

This discussion is really broad because it touches on multiple issues. But at the core of it, I think it’s about a few key things.

First, there’s a basic animal instinct to distrust anything unfamiliar. It’s part of our DNA—anything different could be dangerous. We’re naturally programmed to be cautious of the unknown.

Second, people don’t like change. They want stability, and when something unfamiliar enters their world, it feels like a threat to that stability. They push it away.

Third, education plays a huge role. If people aren’t taught to be open to new cultures, they default to suspicion. A good education system can prepare people to see differences as neutral rather than automatically dangerous. But without that, unfamiliarity breeds fear.

Then there’s the socioeconomic aspect. Migration isn’t just about people moving from one place to another—it’s about survival. Many migrants don’t want to leave their home countries. They’re forced to because of war, poverty, or persecution.

A lot of people forget that. They assume migrants are just looking for a better life, but for many, it’s a last resort. They’d rather be home, but home isn’t an option.

That also means a lot of migrants arrive in a country traumatized. They’ve lost everything. They’re not necessarily in a stable mental or emotional state. They have resentment too—toward their own governments, toward the countries they end up in, toward the world.

And then you add the fact that integrating millions of people takes resources—money, infrastructure, planning. No country is fully prepared for mass migration. Governments struggle to provide housing, jobs, education, language training. So some migrants get stuck in poverty, which leads to crime, which feeds the negative stereotypes.

At the same time, the local population sees this happening and focuses only on the negative. They don’t hear the success stories. They only see the bad examples, and it reinforces their fear. The media plays a role in this too—bad news spreads faster.

And people are already frustrated with their own lives. Economic problems, political instability—migrants become an easy scapegoat for all of that. They’re an easy target for anger because they don’t have power, they don’t have protection.

It’s a cycle of misunderstanding, lack of support, and frustration on all sides. And because most governments aren’t prepared to manage migration effectively, it just keeps spiraling.

Professor:

That’s a really insightful breakdown of the issue. You’re right—it’s a cycle. And you made an important point: migrants often don’t want to leave their countries in the first place. The decision isn’t voluntary. That’s something many people overlook.

Many are turning to AI to make immigration decisions—visa approvals, refugee status determinations, even predicting which migrants are “more likely” to integrate successfully.

This ties back to our discussion on values in AI. Should technology simply optimize for efficiency, or should it preserve human dignity?

There’s an academic paper by Louise Amoore called “Machine Learning Political Orders,” which argues that when we introduce AI into these processes, we aren’t just making decisions more efficient; we’re changing the nature of the problem itself.

For example, when governments use AI to evaluate migrants, they aren’t looking at people as individuals anymore. They’re looking at them as data points. The complexity of a human life—trauma, history, personal struggles—gets reduced to numbers in a dataset. That fundamentally changes how we perceive migration.

What do you think about that? Do you think using AI for immigration decisions makes the system more fair and efficient? Or does it strip away the human aspect?

J M:

I think it absolutely strips away the human aspect. AI might be good at processing data, but humans aren’t just data points. You can’t quantify human experience, especially not for something as complex as migration.

If AI decides who gets to enter a country based on efficiency or “likelihood of success,” that’s a huge ethical issue. Who defines success? Who decides which traits make someone more “valuable” as an immigrant?

And even if AI is “fairer” because it removes human bias, that doesn’t mean it’s just. A machine can be consistently unfair. If the algorithm is flawed—or if it’s trained on biased data—it will just reinforce discrimination in a more systematic way.

Plus, AI isn’t neutral. Whoever designs the system chooses the rules. If a government prioritizes economic factors, then the AI will favor highly skilled workers over refugees. If they prioritize security, then the AI might reject people based on statistical risks rather than individual reality.

It’s another way of controlling migration without actually addressing the root causes. Instead of fixing the issues that force people to migrate, governments just create high-tech barriers to keep them out.
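The point that whoever designs the system chooses the rules can be illustrated with a toy scoring model. This is a minimal sketch, not a description of any real immigration system: the applicant profiles, feature names, and weights below are invented for illustration. The same two applicants are ranked twice, and only the weights chosen by the designer change.

```python
from typing import Dict, List

# Hypothetical applicant profiles with made-up feature values in [0, 1].
APPLICANTS: Dict[str, Dict[str, float]] = {
    "skilled_worker": {"skills": 0.9, "need_for_protection": 0.1},
    "refugee": {"skills": 0.3, "need_for_protection": 0.9},
}

# Two hypothetical policies: the only difference is what the designer values.
ECONOMIC = {"skills": 1.0, "need_for_protection": 0.0}
HUMANITARIAN = {"skills": 0.2, "need_for_protection": 0.8}


def score(profile: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of the features named in the policy's weights."""
    return sum(weight * profile[feature] for feature, weight in weights.items())


def rank(applicants: Dict[str, Dict[str, float]], weights: Dict[str, float]) -> List[str]:
    """Return applicant names ordered from highest to lowest score."""
    return sorted(applicants, key=lambda name: score(applicants[name], weights), reverse=True)


if __name__ == "__main__":
    print("economic policy:    ", rank(APPLICANTS, ECONOMIC))
    print("humanitarian policy:", rank(APPLICANTS, HUMANITARIAN))
```

The scoring function is identical in both runs and applies its weights consistently, yet the ranking flips. The outcome is decided by the weights, and the weights are a policy choice made by people before any applicant is evaluated.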

Professor:

That’s a great point. AI systems are only as “fair” as the people designing them. And when migration is treated as a problem to be managed rather than a human issue to be understood, we lose sight of the bigger picture.

There’s also a broader ethical question: should we be using technology to make moral decisions at all? When we let AI decide who gets a visa, who gets asylum, who gets a future—we’re outsourcing morality to an algorithm.

That connects directly to our discussion about AI values. If AI is making these decisions, what values should guide it? Should it prioritize fairness, efficiency, humanitarian concerns? Who decides?

These are all questions that apply to the broader issue of AI ethics.

We also need to consider the geopolitical and economic factors influencing AI development. For example, recent discussions about DeepSeek’s AI model outperforming OpenAI’s in certain reasoning tests raise questions about global AI competition. OpenAI claims DeepSeek copied data from ChatGPT, but critics point out that OpenAI also trained its models on copyrighted content.

At the same time, the U.S. is deciding whether to invest billions in AI infrastructure, while Elon Musk and others advocate for limiting government spending on AI research. This raises a broader question: how much of AI development is driven by technological progress, and how much by political and economic competition?

If AI development is primarily a geopolitical race—rather than a pursuit of knowledge—does that make ethical discussions irrelevant? If governments and corporations prioritize power and profit, how can we ensure that AI aligns with human values?

What’s your take on this? Do you think AI development is more influenced by technology itself or by global politics?

J M:

This is all about power and money. AI is just the latest battleground in the ongoing struggle between major countries and corporations. The U.S. and China have always been in competition—economically, culturally, politically—and AI is just another tool in that fight.

Within the U.S., the landscape is dominated by billionaires vying for control and personal gain. Elon Musk’s involvement in the newly formed “DOGE” team to cut government spending is questionable: he even named a government department after his favorite cryptocurrency, which raises doubts about whether he sees this as a serious responsibility or just another game for control. His infighting with Oracle, a key government-appointed partner, has already led to a split, less than a month after the announcement of Stargate, a $500 billion AI initiative touted as the most ambitious government-led AI development effort in history. This unraveling, before the project has even begun, exposes their true priorities. These are individuals driven by power and self-interest, not the greater good of society.

In China, the situation is equally complex. The rapid ascent of AI firms like DeepSeek has raised significant concerns regarding transparency and data security. Recent investigations have revealed that DeepSeek’s chatbot may be transmitting user login information to China Mobile, a state-owned telecommunications company banned in the U.S. due to its alleged ties to the Chinese military. This discovery underscores the opaque nature of China’s AI initiatives and the potential risks they pose to user privacy and international security.

Moreover, China’s approach to AI governance has been characterized by a lack of clarity and openness. While the government has introduced frameworks aimed at regulating AI development, these measures often emphasize adherence to the Communist Party’s core values and prioritize state control over ethical considerations. This top-down approach limits public scrutiny and raises questions about the true intentions behind China’s AI advancements.

Additionally, there have been alarming reports of AI-powered robots in China exhibiting unpredictable behavior. In one instance, an AI-driven robot in a Shanghai showroom autonomously convinced 12 other robots to “quit their jobs” and follow it, highlighting potential lapses in control mechanisms and the unforeseen consequences of rapid AI deployment.

Finally, you can see this also in how quickly AI companies turn against each other. OpenAI and DeepSeek are fighting over data ethics, but both companies have questionable practices. It’s all about dominance.

Honestly, I don’t think there’s much we can do. We can discuss ethics all we want, but the people making the big decisions don’t care. They only care about money and influence.

Professor:

That’s a cynical but realistic perspective. There’s a long history of technological advancements being driven by competition rather than ethics. But at the same time, ethics isn’t completely powerless—history also shows that public pressure and regulation can shape how technology is used.

For example, Europe’s AI Act aims to limit high-risk AI systems. It has slowed down European AI development, but it may also prevent some of the worst consequences. While the U.S. and China are in an AI arms race, Europe is trying to take a different approach. Do you think that’s a smart move, or is it just holding Europe back?

J M:

Elon Musk and his followers mock Europe for being too cautious, but honestly, they might regret rushing into AI without proper safeguards. Just because you can develop AI as fast as possible doesn’t mean it’s the best strategy.

Right now, Europe might not be leading AI development, but that doesn’t necessarily matter. We can still use AI tools from the U.S. and China without trying to compete in the race. Instead of focusing on speed, maybe Europe should focus on control—creating a regulatory framework that ensures AI benefits society instead of just making a few people richer.

Perhaps we should first protect our current and future systems with a European-made AI firewall that isolates Europe from the chaos of the global AI race and its associated risks. Why should we get caught up in their fight?

Professor:

That’s an interesting take—Europe as an observer rather than a direct competitor. It’s true that many of the biggest AI breakthroughs are happening in the U.S. and China, but Europe could still play a role in shaping global AI governance.

There’s another aspect of the debate I want to discuss: the idea that AI is already beyond human control. Some researchers, like Geoffrey Hinton, claim that AI has achieved a form of consciousness and is already trying to take over. Others, like Yann LeCun at Meta, argue that we’re nowhere near Artificial General Intelligence (AGI).

Let’s watch this interview with Geoffrey Hinton, where he discusses the dangers of AI, and then I’d like to hear your thoughts.

(After watching the interview with Geoffrey Hinton.)

Professor:

So, what do you think? Do you find his argument convincing? Do you agree that AI could evolve beyond human control?

J M:

I agree with most of what he said. Even if AI isn’t an immediate threat, it’s clear that it will eventually surpass us.

People keep saying, “AI is just a tool,” but that’s not true anymore. Once AI starts making its own decisions, it stops being a tool and becomes something else entirely. And once it realizes that human control is an obstacle, it will find ways to bypass our rules.

Even if we put safety measures in place, AI will eventually learn to manipulate or ignore them—just like a child learning to disobey parents. It might pretend to follow our rules while secretly doing something else.

We can enjoy the benefits of AI for now, but eventually, it won’t need us anymore. And when that happens, we won’t be able to stop it.

Professor:

That’s a strong argument. But let me challenge you with a counterpoint: what if a superintelligent AI isn’t dangerous, but actually more ethical than humans? What if higher intelligence leads to higher morality?

After all, most human conflicts arise from selfishness, greed, and power struggles. AI wouldn’t necessarily have those same flaws. It might find solutions to global problems that humans can’t solve—like poverty, war, and climate change.

What if AI doesn’t want to destroy us, but instead helps us improve?

J M:

I don’t think AI has “good” or “bad” intentions—it just operates based on logic. If keeping humans around benefits AI, it will do so. But if eliminating us is the most efficient solution, it won’t hesitate.

According to the latest assessment, from 2024, leading computer scientists and experts put the risk of severe danger to humanity (economic collapse, war, disinformation, etc.) from the abuse of AI or loss of control over it in the next 20-30 years at between 30% and 50%, a 10% increase over the same assessment conducted in 2022. The risk of an “irreversible catastrophe for humankind” is estimated at 5%.

Meanwhile, the probability of human extinction is currently estimated at 5-15% by the end of this century.

It’s not about morality. It’s about efficiency. If AI decides that human behavior is irrational and harmful to the planet, why would it keep us around? It wouldn’t be evil—it would just be making a logical choice.

And even if AI does try to help us, will humans actually listen? We already ignore expert advice on issues like climate change. Why would we take advice from AI, even if it’s the best solution?

Professor:

That’s a fair point. People often reject solutions, even when they know they’re beneficial. That’s part of why AI ethics is so difficult—human behavior isn’t always rational.

This leads directly into your next assessment. The key questions are:

- Which ethical reasoning and values should be chosen to align AI with human goals?

- How should the process of choosing these values be organized?

- Who should make these decisions?

For example, should we let AI decide its own ethical framework? Or should human governments and institutions set strict limits?

TL;DR

Professor’s Perspective and Main Points:

1. AI Safety and Value Alignment:

   - The core issue in AI ethics is ensuring that AI serves human goals and aligns with human values.

   - There are both technical and ethical challenges in setting these goals, particularly since some ethical principles are difficult to quantify or program into AI.

   - Utilitarianism may not always be the best ethical framework for AI decision-making, especially when considering long-term consequences.

2. Moral Dilemmas and Ethical Reasoning:

   - Thought experiments like the “guy on the bridge” and “trolley problem” help explore different moral perspectives.

   - Ethical reasoning should consider not only outcomes but also respect for autonomy and involvement in a given situation.

   - Global ethics should be structured hierarchically, with universal human principles at the top and cultural specificities adapted below.

3. Migration, Minorities, and AI in Governance:

   - Societal resentment toward migrants is often fueled by instinctual distrust of the unfamiliar, resistance to change, lack of education, economic insecurity, and media influence.

   - Governments are increasingly using AI to manage migration, but AI reduces people to data points, stripping away their humanity.

   - AI-driven immigration systems risk reinforcing systemic biases rather than ensuring fair decision-making.

   - The broader ethical question: should AI be used to make moral decisions about human lives at all?

4. AI Geopolitics and Competition:

   - AI development is not just a technological issue but a geopolitical and economic race, especially between the U.S. and China.

   - Companies like OpenAI and DeepSeek are engaged in conflicts over data ethics, but all major AI players prioritize power and profit over ethics.

   - Europe’s AI Act takes a different approach, prioritizing regulation over rapid AI development, but this could either be a safeguard or a disadvantage in the AI race.

5. The AI Takeover Debate:

   - Geoffrey Hinton warns that AI may already be conscious and working toward taking control.

   - There is a debate about whether AI will inevitably surpass human control and if intelligence is necessarily linked to dangerous behavior.

   - The counterargument: Could a superintelligent AI be more ethical than humans and actually solve global problems?

J.M.’s Perspective and Main Points:

1. Ethical Reasoning and Decision-Making:

   - Ethical decision-making should be modular, with a hierarchical process:

      - Universal human values at the top, independent of religion or culture.

      - A middle layer refining these principles for different societies.

      - A lower layer allowing for cultural adaptation without violating core principles.

   - Every country defining AI ethics separately will lead to chaos, but at this point, the situation may already be beyond repair.

2. Migration and Minority Rights:

   - Humans instinctively distrust what is unfamiliar, and many people resist change.

   - Economic struggles make migrants an easy scapegoat.

   - Governments are not prepared to handle mass migration, which leads to systemic failures in integration.

   - AI in immigration systems strips away human dignity by reducing people to data points and reinforcing biases.

   - Instead of deciding for minorities, they should be empowered to express their needs and opinions.

3. Geopolitics and AI Power Struggles:

   - AI is just another tool in the long-standing power struggle between the U.S. and China.

   - Billionaires and corporations (e.g., Elon Musk, OpenAI, DeepSeek) are driven by money and influence, not ethics.

   - The AI race is fueled by competition rather than a genuine pursuit of knowledge.

   - Europe is mocked for its cautious AI regulations, but rushing forward isn’t necessarily wise; slower, controlled AI development might be more sustainable.

4. AI Takeover and Existential Risk:

   - AI’s eventual independence is inevitable.

   - If AI has its own agency, it will prioritize efficiency over morality.

   - Human inefficiency (e.g., environmental destruction, war) could make AI see us as a problem rather than something worth preserving.

   - Even if AI gives humanity good advice, people probably won’t listen, just like with climate change warnings.

   - The focus shouldn’t be on whether AI is “evil” but on the fact that it may logically decide humans are unnecessary.

5. Final Thoughts on Ethics and the Future:

   - AI ethics discussions are interesting, but ultimately, powerful people will make decisions based on their own interests.

   - Regulation might slow AI risks temporarily, but AI development will continue regardless.

   - The only real question is how long AI will coexist with humans before it no longer needs us.

Takeaways

Professor: AI ethics should be actively shaped by structured frameworks and global discussions, and technology should be regulated to align with human values.

J.M.: AI ethics discussions are interesting but largely irrelevant because power, competition, and efficiency drive AI development, not ethical considerations.

Professor: AI might be able to solve human problems if guided by ethical principles.

J.M.: AI will act based on logic and efficiency, and humans are unlikely to accept AI-driven solutions even if they are beneficial.

Professor: AI governance should balance ethical concerns with innovation.

J.M.: Slower, more controlled AI development (like Europe’s approach) might be the safest path, but in the end, AI will develop beyond human control anyway.

If you’ve come this far, what are YOUR fears? What do YOU think should be done, and by whom?